Adventures in Salesforce and DataWeave (Beta) Part 3

So far we have looked at two areas in this series of blog posts:

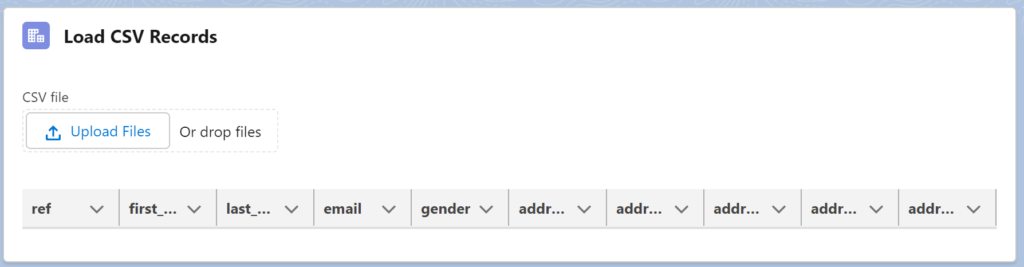

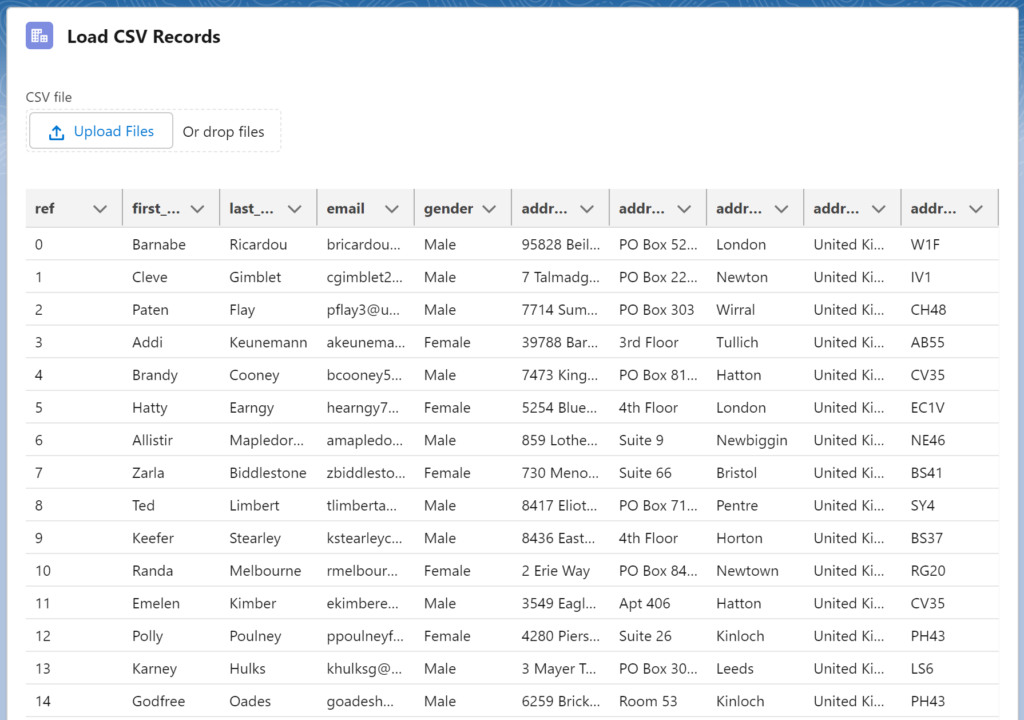

In this post we will start to look at how we can read a CSV file, with the ultimate challenge of inserting contacts and their associated accounts from a CSV list. To start, however, we will look at how to upload a CSV file and then use some Apex to convert it to JSON to show in a DataTable. This could be done client side, but we will use Apex so that we have something to build on when we get to the next stage.
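For comparison, the client-side route might look roughly like the sketch below. It mirrors what the CSVtoJSON DataWeave script later in this post does: the header row supplies the field names, and each row gets a `ref` taken from its zero-based index. `csvToRecords` is a hypothetical name, and the sketch assumes a simple CSV with no quoted fields (no commas or line breaks inside values).

```javascript
// Rough client-side equivalent of the CSVtoJSON DataWeave script.
// Assumption: simple CSV, header in the first row, no quoted values.
function csvToRecords(csvText) {
  // Split into lines (handles \n and \r\n) and drop blank lines
  const lines = csvText.split(/\r?\n/).filter((line) => line.trim() !== "");
  if (lines.length === 0) {
    return [];
  }
  // The first row holds the field names
  const headers = lines[0].split(",").map((h) => h.trim());
  // Every other row becomes one object, keyed by the header names
  return lines.slice(1).map((line, index) => {
    const values = line.split(",");
    const fields = {};
    headers.forEach((header, i) => {
      fields[header] = values[i] !== undefined ? values[i].trim() : "";
    });
    // Like "ref: index" in the DataWeave script: a unique row reference
    return { ...fields, ref: index };
  });
}

// Usage with a tiny hypothetical sample
const sample = "first_name,last_name,email\nAda,Lovelace,ada@example.com";
console.log(csvToRecords(sample));
```

Doing it in Apex instead keeps the parsing in one place for when we move on to inserting records.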

Let's set up a simple LWC called ‘DataWeaveCSV’ that will have a file upload input and a datatable:

```html
<template>
    <lightning-card title="Load CSV Records" icon-name="standard:account">
        <div class="slds-p-around_medium">
            <!-- multiple is a boolean attribute: any string value, even "false",
                 switches it on, so it is left out to allow a single file -->
            <lightning-input
                type="file"
                label="CSV file"
                accept=".csv"
                onchange={handleInputChange}
            ></lightning-input>
        </div>
        <template if:true={data}>
            <div class="slds-p-around_medium">
                <!-- ref is the unique row index generated by the DataWeave script -->
                <lightning-datatable
                    key-field="ref"
                    hide-checkbox-column
                    data={data}
                    columns={columns}
                ></lightning-datatable>
            </div>
        </template>
    </lightning-card>
</template>
```

The JS file will be:

```javascript
import { LightningElement, track } from "lwc";
import { ShowToastEvent } from "lightning/platformShowToastEvent";
import loadCSV from "@salesforce/apex/DataWeaveXmlToJSON.loadCSV";

// Columns match the field names returned by the CSVtoJSON DataWeave script
const columns = [
    { label: "ref", fieldName: "ref", type: "text", editable: false },
    { label: "first_name", fieldName: "first_name", type: "text", editable: false },
    { label: "last_name", fieldName: "last_name", type: "text", editable: false },
    { label: "email", fieldName: "email", type: "text", editable: false },
    { label: "gender", fieldName: "gender", type: "text", editable: false },
    { label: "address_line_1", fieldName: "address_line_1", type: "text", editable: false },
    { label: "address_line_2", fieldName: "address_line_2", type: "text", editable: false },
    { label: "address_line_3", fieldName: "address_line_3", type: "text", editable: false },
    { label: "address_line_4", fieldName: "address_line_4", type: "text", editable: false },
    { label: "address_line_5", fieldName: "address_line_5", type: "text", editable: false }
];

export default class DataWeaveCSV extends LightningElement {
    columns = columns;
    @track data = [];

    handleInputChange(event) {
        // Only a single file is expected
        const file = event.target.files[0];
        const reader = new FileReader();
        // Once the file has been read, send its text content to Apex
        reader.addEventListener(
            "load",
            () => {
                this.uploadCSV(reader.result);
            },
            false
        );
        if (file) {
            reader.readAsText(file);
        }
    }

    uploadCSV(data) {
        // Pass the raw CSV text to Apex; the result comes back as a JSON string
        loadCSV({ records: data })
            .then((result) => {
                this.data = JSON.parse(result);
                this.dispatchEvent(
                    new ShowToastEvent({
                        title: "Success",
                        message: "Records Found",
                        variant: "success"
                    })
                );
            })
            .catch((error) => {
                this.dispatchEvent(
                    new ShowToastEvent({
                        title: "Error",
                        message: error.body.exceptionType + " : " + error.body.message,
                        variant: "error"
                    })
                );
            });
    }
}
```

And finally the meta file:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<LightningComponentBundle xmlns="http://soap.sforce.com/2006/04/metadata">
    <apiVersion>56.0</apiVersion>
    <isExposed>true</isExposed>
    <targets>
        <target>lightning__HomePage</target>
    </targets>
</LightningComponentBundle>
```

The main dataWeaveCSV.js file is where the fun is, but let's have a look at the data we are importing first. Getting some mock data to upload is fairly easy, and I have a CSV file that looks like this:

This is stored as a CSV file with the field names in the first row.
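Since the DataWeave script and the DataTable columns both rely on these exact header names, a file with different headers would most likely just show up as empty columns. A quick check of the first row before calling Apex could catch that early. This is a hypothetical helper (`missingHeaderFields` and `EXPECTED_FIELDS` are not part of the original component), and it assumes a plain comma-separated header row with no quoted names.

```javascript
// Hypothetical helper: which expected field names are missing from the
// CSV header row? Assumes a plain comma-separated header, no quoting.
const EXPECTED_FIELDS = [
  "first_name", "last_name", "email", "gender",
  "address_line_1", "address_line_2", "address_line_3",
  "address_line_4", "address_line_5"
];

function missingHeaderFields(csvText, expected = EXPECTED_FIELDS) {
  // Only the first line is needed; handles both \n and \r\n endings
  const headerLine = csvText.split(/\r?\n/, 1)[0] || "";
  const present = new Set(headerLine.split(",").map((h) => h.trim()));
  // Keep the expected names that do not appear in the header
  return expected.filter((field) => !present.has(field));
}

// Usage: an empty array means every expected column is present
console.log(missingHeaderFields("first_name,last_name,email\nAda,Lovelace,ada@example.com"));
```

In the component, a non-empty result could be reported with the same kind of error toast before `uploadCSV` is called.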

In our JS file we set up a handler for the onchange event on the file upload component, so we have:

```html
<!-- multiple is omitted: any value, even "false", would turn it on -->
<lightning-input
    type="file"
    label="CSV file"
    accept=".csv"
    onchange={handleInputChange}
></lightning-input>
```

```javascript
handleInputChange(event) {
    const file = event.target.files[0];
    const reader = new FileReader();
    reader.addEventListener(
        "load",
        () => {
            this.uploadCSV(reader.result);
        },
        false
    );
    if (file) {
        reader.readAsText(file);
    }
}
```

When a file is uploaded, handleInputChange is called. We get the file from the event and create a FileReader object, to which we attach an event listener for the load event. Calling readAsText starts reading the file, and when the load event fires we pass the result of the reader (the actual contents of the file) to uploadCSV.
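The load listener works fine; if you prefer async/await, a common alternative is to wrap FileReader in a Promise. This is a sketch rather than the code used in this post: `readFileAsText` is a hypothetical name, and the optional `ReaderClass` parameter exists only so the function can be exercised outside a browser with a stand-in reader. In the LWC you would call `readFileAsText(file)` and the browser's FileReader is used.

```javascript
// Sketch: promise-based wrapper around FileReader.readAsText.
// ReaderClass defaults to the browser's FileReader; it is a parameter only
// so the function can be tested with a stand-in outside the browser.
function readFileAsText(file, ReaderClass = globalThis.FileReader) {
  return new Promise((resolve, reject) => {
    const reader = new ReaderClass();
    // "load" fires once the whole file has been read; result holds the text
    reader.addEventListener("load", () => resolve(reader.result));
    // "error" fires if the read fails
    reader.addEventListener("error", () => reject(reader.error));
    reader.readAsText(file);
  });
}

// Hypothetical rewrite of the handler using it:
// async handleInputChange(event) {
//     const file = event.target.files[0];
//     if (file) {
//         this.uploadCSV(await readFileAsText(file));
//     }
// }
```

Both versions do the same thing; the event listener version simply avoids introducing a helper at this early stage.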

Our uploadCSV is also fairly simple (the toast messages to the user take up most of the code):

```javascript
uploadCSV(data) {
    loadCSV({ records: data })
        .then((result) => {
            this.data = JSON.parse(result);
            this.dispatchEvent(
                new ShowToastEvent({
                    title: "Success",
                    message: "Records Found",
                    variant: "success"
                })
            );
        })
        .catch((error) => {
            this.dispatchEvent(
                new ShowToastEvent({
                    title: "Error",
                    message: error.body.exceptionType + " : " + error.body.message,
                    variant: "error"
                })
            );
        });
}
```

We simply call the loadCSV Apex method, passing in a parameter called records that holds the data passed into the function (the file contents as a string). We get back a string in JSON format, so we parse it with JSON.parse and assign it to data, which the DataTable displays. The DataTable columns are set up to match the fields in the returned JSON.
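If you would rather not keep the hard-coded columns array in step with the DataWeave script, the columns could be derived from the parsed JSON instead. This is a sketch, not part of the original component: `buildColumns` is a hypothetical name, and it assumes every record has the same keys as the first one.

```javascript
// Sketch: build lightning-datatable column definitions from the keys of
// the first parsed record. Assumes all records share the same keys.
function buildColumns(records) {
  if (!Array.isArray(records) || records.length === 0) {
    return [];
  }
  return Object.keys(records[0]).map((key) => ({
    label: key,
    fieldName: key,
    type: "text",
    editable: false
  }));
}

// In uploadCSV's .then() this might become:
//     this.data = JSON.parse(result);
//     this.columns = buildColumns(this.data);
```

The trade-off is that labels come straight from the field names, so the hard-coded array is still the better choice if you want friendlier labels or specific column types.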

The Apex code is where the DataWeave comes in. First we need a new DataWeave .dwl file in our dw directory. Let's call it CSVtoJSON.dwl; the file is below. We define an input called data, which is CSV (the first row holds the header fields), and declare that we output JSON. At this stage we simply have a map call, which receives each row as an item along with the index of the row. We use the index to create a unique reference with ref: index, and for the time being we simply pass each field back as is, following the pattern fieldname: item.fieldname. This lets us rename fields, and we could also modify the data as we pass it back.

```dataweave
%dw 2.0
input data application/csv
output application/json
---
// Each CSV row arrives as "item"; "index" is its zero-based position
data map (item, index) -> {
    ref: index,
    first_name: item.first_name,
    last_name: item.last_name,
    email: item.email,
    gender: item.gender,
    address_line_1: item.address_line_1,
    address_line_2: item.address_line_2,
    address_line_3: item.address_line_3,
    address_line_4: item.address_line_4,
    address_line_5: item.address_line_5
}
```

Our Apex code is fairly straightforward. It is passed our records (the string of CSV records from the LWC), loads our DataWeave script from the file above (CSVtoJSON) and then simply executes the script, passing our input in as a map with the key 'data' (matching the input name declared in the script) and the records as the value. We get back a result, which we return to the LWC as a string with result.getValueAsString():

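```apex
// Added to the existing DataWeaveXmlToJSON class that the LWC imports from
@AuraEnabled(cacheable=true)
public static String loadCSV(String records) {
    // Load the CSVtoJSON.dwl script from the dw directory
    DataWeave.Script dwscript = DataWeave.Script.createScript('CSVtoJSON');
    // The map key 'data' binds to the script's "input data" directive
    DataWeave.Result result = dwscript.execute(
        new Map<String, Object>{ 'data' => records }
    );
    // The script outputs application/json, so this is a JSON string
    return result.getValueAsString();
}
```

Our current project (including the first two posts) now looks like this: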

So the LWC component looks like this:

And then when we upload our CSV file we get:

Again, performance is not great, with 90 records taking seconds to import and the CPU time pretty close to the 5 second limit. Hopefully, as this is a beta, this will improve over time.